The Data Vault: facts, fables, and truth

At the heart of the Data Vault methodology is an ingenious modeling technique for data warehouses. It was put together by Dan Linstedt at the U.S. Department of Defense in an already distant past. A Data Vault stores all incoming transactions in a hyper-normalized structure. Regardless of whether this data has integrity and accuracy: “100% of the data 100% of the time”. The Data Vault distinguishes between facts and the truth. But who is waiting for the facts? In this article, read the main advantages and disadvantages, find the four differences between a third normal form and a Data Vault, and finally arm yourself with the 10 irrefutable facts about Data Vaults.

Compliance and a Data Vault go hand in hand

As indicated, the Data Vault (DV) distinguishes between facts (raw perspective) and the truth (edited perspective). This can be useful to avoid losing any transactions or mutations. And it is sometimes a must from a compliance and legislative perspective. On all fields, you consistently keep history in the Data Vault. An ingenious construction of hub, link, and satellite tables provides flexibility in terms of storage.

Find the 4 differences between the third normal form and the Data Vault

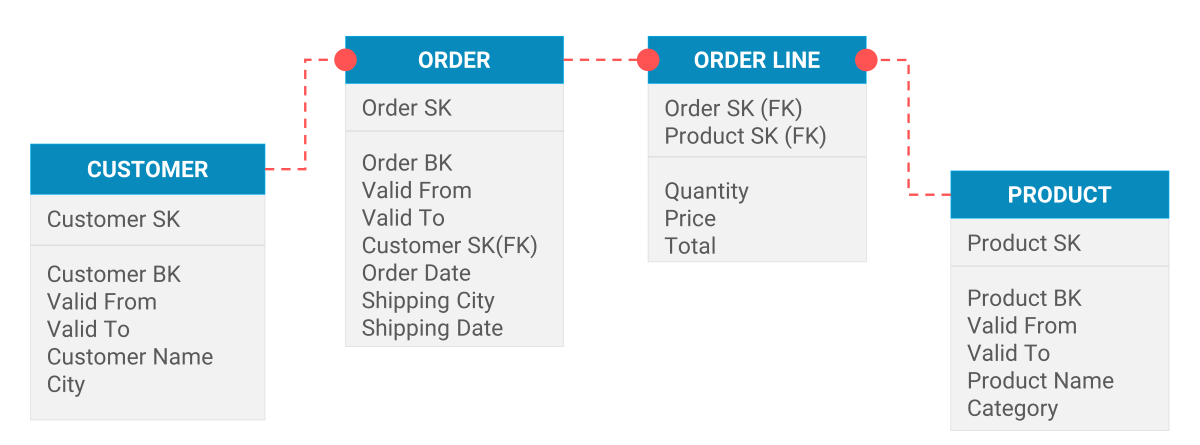

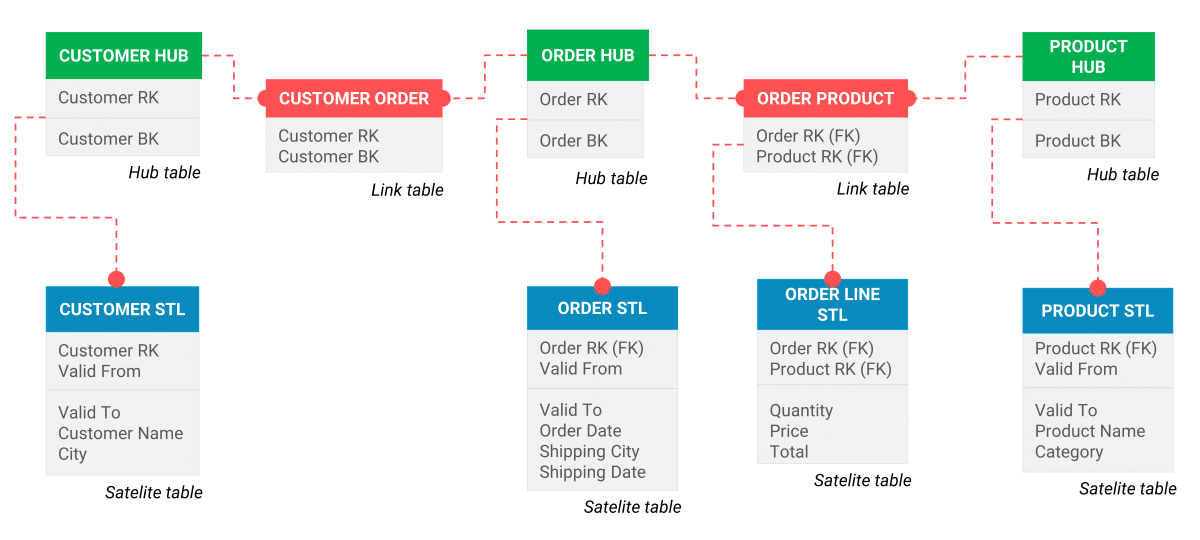

Data Vault modeling is vastly different from regular data modeling (3NF). Below you can see the differences. From four tables (Figure 1) you go to nine tables (Figure 2). So a big difference is: there’s more tables. What other differences do you see? Leave a comment with your answer.

Figure 1: a company’s orders modeled according to the third normal form (3NF)

Figure 1: a company’s orders modeled according to the third normal form (3NF)

Figure 2: example of a company’s orders modeled using the Data Vault methodology

Figure 2: example of a company’s orders modeled using the Data Vault methodology

How do you model the transformation from 3NF to DV?

- You split each master table (reference table such as customer) into a hub table and a satellite table. The keys come in the hub table. The descriptive fields come in one or more satellite tables.

- This creates flexibility: you can easily attach multiple satellite tables to the hub. Hence the name “hub”. This allows you to easily link customer data from other systems or external sources. This keeps the existing tables intact.

- Joins between two master tables are created with a link table. This creates flexibility in the type of relationship that data has with each other.

- You split each transaction table into a link table and a satellite table. The keys come in the link table. The transaction details come in the satellite table.

Example: suppose orders can suddenly be placed not only by companies but also by consumers. Then a few additional tables will suffice: Person Order Link (Person RK, Order RK), Person Hub (Person RK, ID (BK)), and Person Satellite (Person RK, first name, last name, etc.). The current Data Vault data model remains intact in this process. That is, there is no need to modify existing tables.

The 10 irrefutable facts about the Data Vault

- More tables for storage: data storage in a Data Vault requires more tables. At least twice as many tables are needed.

- More joins for data retrieval: more complex joins are needed to select the right data.

- Additional component: the Data Vault is an additional component in a mature DWH architecture. And that means extra work.

- End users may not access the Data Vault. The reason? It may contain incorrect or incomplete data.

- Three modeling techniques side by side: in the complete DWH architecture, three modeling techniques occur with the Data Vault: 3NF, Data Vault, and Dimensional (star schema, snowflake).

- Flexibility in data storage: the Data Vault provides flexibility in data storage in a number of ways.

- Freedom: particularly in the type of relationship entities can have with each other

- Additional flexibility: being able to easily add new sources and entities. And for this, you do not have to modify the existing structure.

- More data storage: even wrong or incomplete data is stored.

- Closed, secure environment: end users are not allowed to retrieve data from the Data Vault. After all, these may contain inaccuracies. So the Data Vault is secure as the name implies.

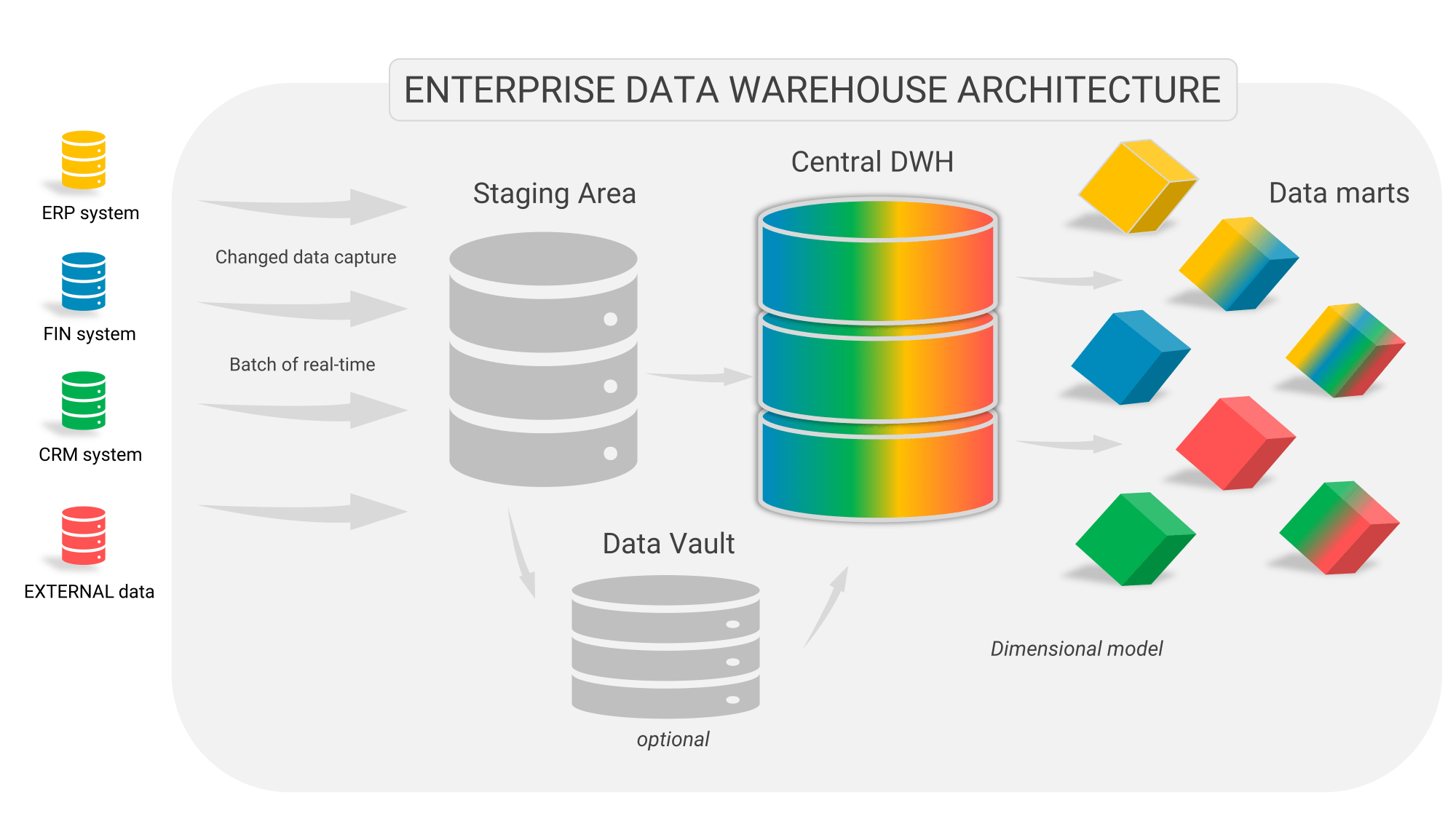

How do you design a Data Vault architecture?

A Data Vault can be applied as a Central Data Warehouse (CDW), but also as a pre-CDW or Persistent Staging Area (PSA). If you apply the Data Vault as a CDW, then it is not a mature DWH architecture. After all, you build data marts from the Data Vault and these have a dimensional structure.

Figure 3: The Data Vault route and the traditional Business Analytics route. This figure shows that implementing a Data Vault involves extra work and makes the architecture more complex.

Figure 3: The Data Vault route and the traditional Business Analytics route. This figure shows that implementing a Data Vault involves extra work and makes the architecture more complex.

For the detailed data, you then have to go back to the Data Vault. That one has a different structure and has no transformations or different transformations. Moreover, it may also contain incorrect data. So a drilldown on a Data Vault is also dangerous. Therefore, a Data Vault can never replace the CDW in a mature DWH architecture. You want one version of the truth and then it cannot be enforced. Or you can only do that at a very high cost. Because, the data warehouse bus, which enforces that, is no longer there.

One specific indicator sometimes does require multiple aggregation tables. Each data mart can, in theory, thereby create an entirely separate truth. Thus, a Data Vault can easily degenerate into a collection of separate data silos, just like in the pre-data warehouse era.

A mature DWH architecture

In a mature DWH architecture, a number of functions are performed:

- you test the data quality

- you build up a history

- you combine data

- you enforce consistency between data

For the latter, you think about consistency between aggregations and the details but also across aggregation tables.

In an Enterprise DWH architecture, you can basically choose 2 routes: the traditional route and the Data Vault route.

The advantages of the Data Vault

- The Data Vault’s data model is separate from the sources’ data model. Changes therein are less likely to impact the Data Vault’s data model.

- Flexibility: data storage is flexible and easily expandable. It’s easy to add sources without having to change the structure of the current tables of the Data Vault. Is this flexibility achievable in any other way? A Data Vault is pre-eminently the way to achieve this kind of flexibility.

- Reloadability: data from the Data Vault can always be used to reload the CDW/data marts, far back in time. Even in case source systems have a different structure in the meantime.

- Compliance: always be able to trace the data back, regardless of structure changes in source systems.

- Speed of loading: when there are many satellite tables per hub, you can load them in parallel. When the hardware supports parallel processing, data loading is much faster.

The disadvantages of the Data Vault

- More work: the Data Vault is more complex to create. In addition, you now have an extra component that you also have to maintain.

- More transformations: in the Data Vault, you normalize entities beyond the source. Afterwards, you have to “work back” to a dimensional data model.

- More knowledge required: with the Data Vault comes a 3rd modeling technique. Employees must master these techniques bit by bit.

- No integrity: the data in the Data Vault lacks integrity and is not always correct.

What happens after the Data Vault in the loading process?

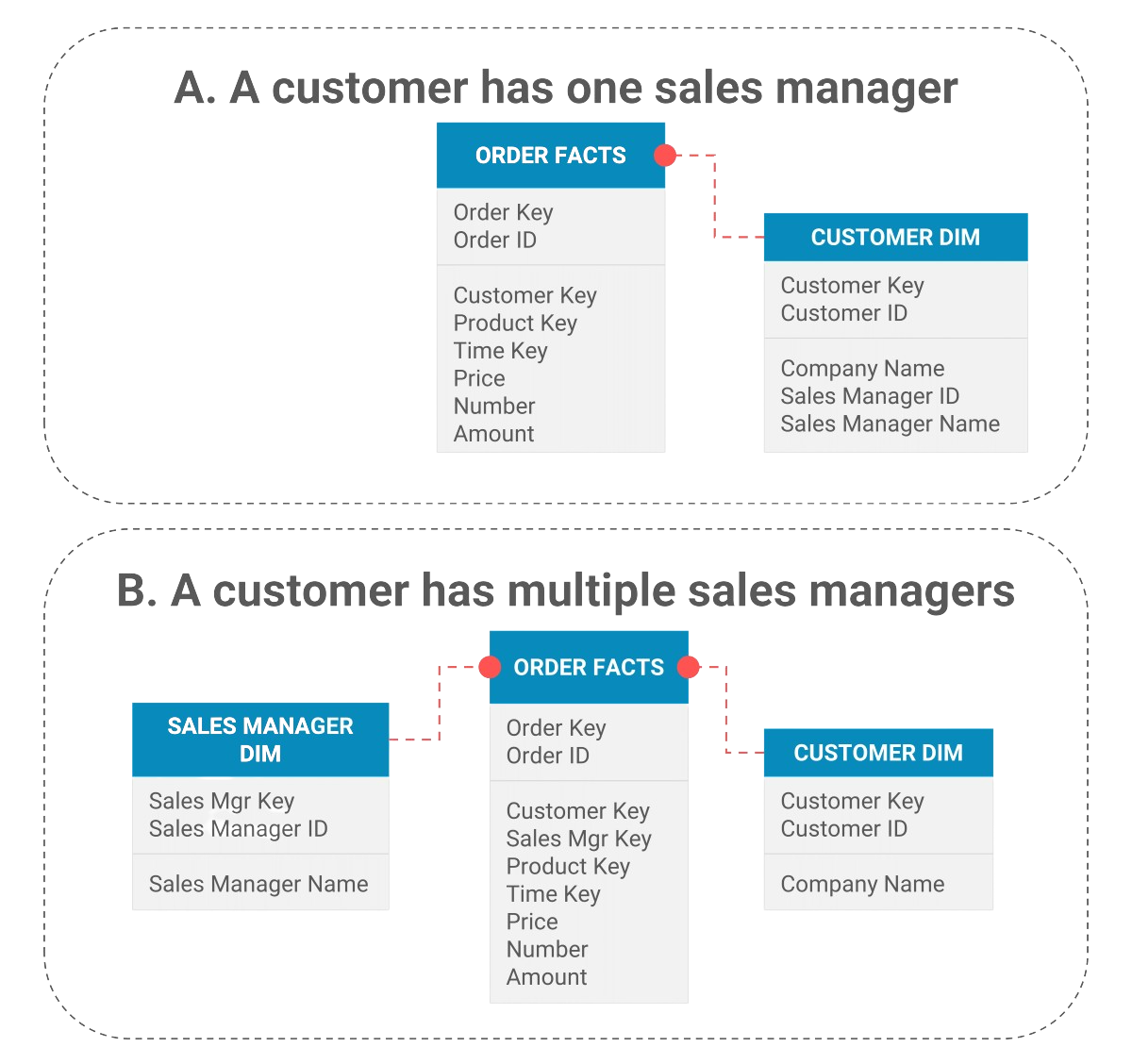

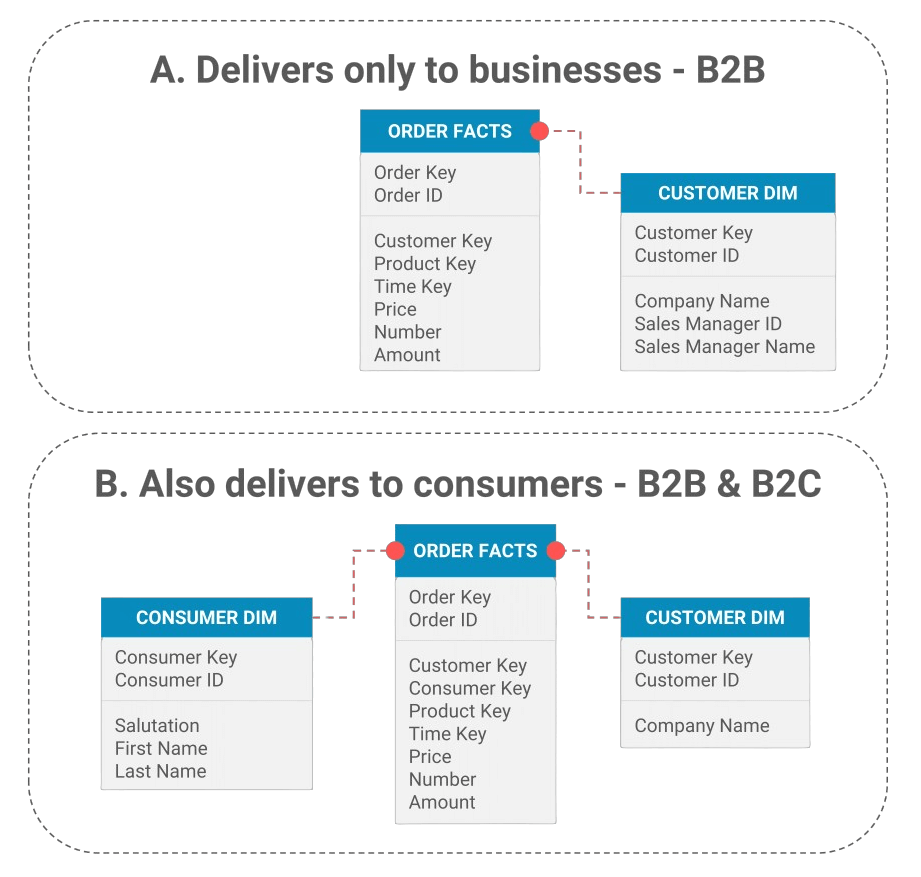

The main advantage of the Data Vault is the flexibility of data storage. In a mature DWH architecture, you need a CDW and possibly one or more data marts or cubes in addition to a Data Vault. Of course, it is wonderful when the structure changes in the source do not necessarily require the Data Vault to be “cracked open”. But this does not apply to the CDW and data marts, as these are dimensionally modeled (preferred) or 3NF. To illustrate this phenomenon, we give two examples.

By the way, you can also model the change regarding sales managers and consumers in a slightly different way but that doesn’t matter for the examples.

Data Warehouse and Data Governance Training

Elevate your data strategy with our intensive 3-day in-company Data Warehouse & Data Governance training. Gain practical tools and insights to conquer data challenges, from data quality to privacy compliance. Become a data warehouse expert and make informed decisions. Contact us now to secure your spot!

Elevate your data strategy with our intensive 3-day in-company Data Warehouse & Data Governance training. Gain practical tools and insights to conquer data challenges, from data quality to privacy compliance. Become a data warehouse expert and make informed decisions. Contact us now to secure your spot!

Source changes always lead to modification of data marts

Both examples show that even with a Data Vault it is necessary to adjust the data model of the CDW/data marts when the structure of the sources changes. What does this mean for the existing data in the CDW/data marts? The CDW can then either be completely rebuilt from the Data Vault (ETL/script modifications are necessary). Or a conversion ETL needs to be written that converts existing data from the CDW to the new structure and content.

Is the story of reloadability even true?

So the advantage of reloadability does not seem to be there. After all, in many, but not all, cases a conversion can suffice. In addition, a conversion can mark fact records from before the conversion. So that you can also determine (in retrospect) when the change took effect.

Situations in which a Data Vault has added value

- From the point of view of compliance and traceability, a Data Vault can offer added value. After all, there is an accurate record of when a data element enters the DWH environment from which source system.

- When the structure of source systems changes frequently to very frequently, or the organization operates in a very dynamic environment, a Data Vault can offer added value.

- When a lot of accountability information needs to be generated with fixed reports. A so-called Business Data Vault (which has the role of CDW) can then suffice, without data marts, in which only correct data is loaded. The BI tool retrieves these directly from the Business Data Vault.

Figure 4: data model of the DWH before the change in the source is made (situation A: a customer has 1 sales manager) and the data model after the source is changed (situation B: multiple sales managers per customer)

Figure 4: data model of the DWH before the change in the source is made (situation A: a customer has 1 sales manager) and the data model after the source is changed (situation B: multiple sales managers per customer) Figure 5: Data model of the data warehouse before the change in the source occurs (situation A: only companies) and the data model after the source is changed (situation B: also consumers can now be served)

Figure 5: Data model of the data warehouse before the change in the source occurs (situation A: only companies) and the data model after the source is changed (situation B: also consumers can now be served)

Responses

Daan,

4 years later, I’m wondering if your outlook on Data Vault has changed.

What are your thoughts on Data Vault now? Given so many advances recently, Delta Lake for example, is there a need to do up front modelling such as DV?

I wasn’t a fan of the Data Vault anyway because it’s a huge burden on a BI project where you just want to give valuable insights to the users and to improve decision making on a daily basis. With new technology available now, like Microsoft Fabric, I’m even more against it. You need very good arguments to defend such a data modelling technique and extra component in your BI architecture.